Neural networks

Here is an unsolicited and clueless opinion piece on AI. In other words, a bunch of nonsense. Enjoy!

Analogy

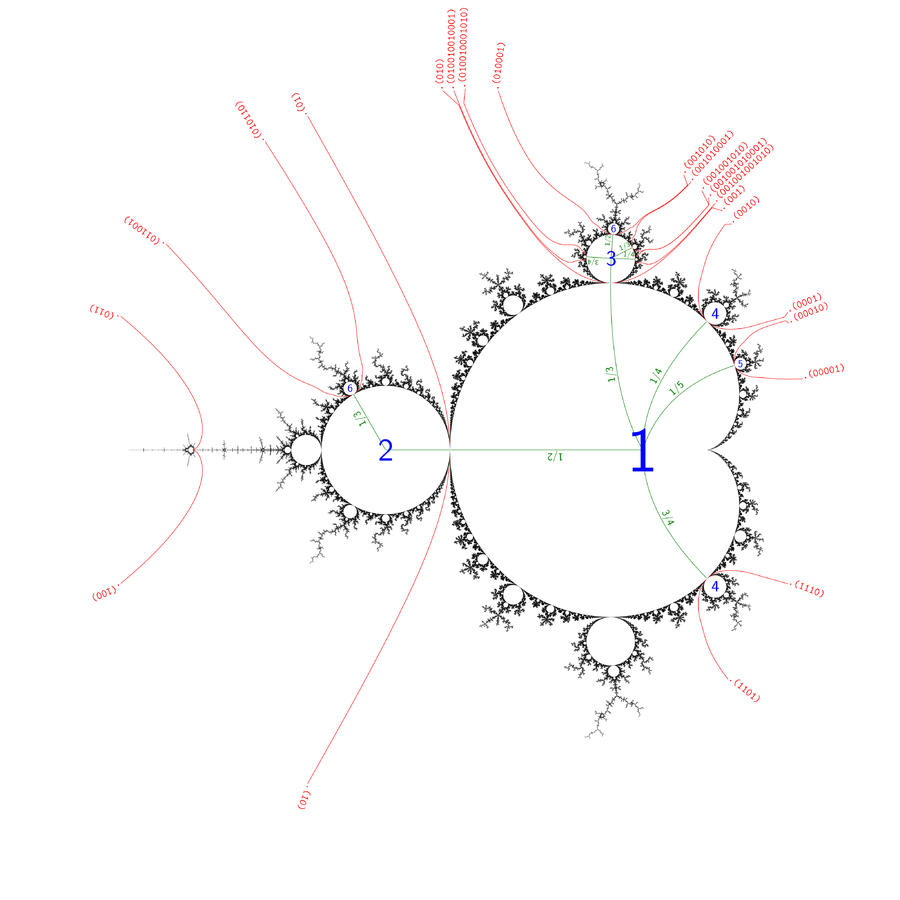

Imagine a civilization that discovers a picture of the Mandelbrot set in infinite detail, without any understanding of the mechanism that produced it. It’s mesmerized by the beauty and complexity of the set. It produces works of art, culture, and science based on these images. It wants more: the ability to generate its own Mandelbrot-like sets. What does this civilization do?

It creates a whole field of science for finding the relations between different parts of the set. Thousands of individuals measure, compare, and reproduce simple subsets. Maybe they even establish the concept of a “fractal”: something that contains versions of itself at different scales. The civilization manages to produce pictures similar to different parts of the Mandelbrot set. This ability kicks off a wave of incremental technological advancements that transforms its society. Big corporations form around this tech and offer countless new products and services. But at the end of the day, how close would they get to actually discovering the equation?
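For contrast, here is roughly how small “the equation” is. The Mandelbrot set is the set of complex numbers c for which iterating z → z² + c, starting from z = 0, stays bounded. The sketch below is a minimal illustration, assuming the standard escape-time test (once |z| exceeds 2 the orbit is known to diverge) and an arbitrary cap of 100 iterations; the function names `in_mandelbrot` and `render` and the plotted region are hypothetical choices made only for this example.

```python
def in_mandelbrot(c: complex, max_iter: int = 100) -> bool:
    """Approximate membership test: iterate z -> z*z + c starting from z = 0.

    If |z| ever exceeds 2, the orbit is guaranteed to diverge, so c is outside.
    If it stays bounded for max_iter steps, we treat c as inside (an approximation).
    """
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > 2:
            return False
    return True


def render(width: int = 60, height: int = 24) -> str:
    """Render a coarse ASCII view of the region [-2, 1] x [-1.2, 1.2]."""
    rows = []
    for row in range(height):
        # Map the row index to the imaginary axis, top to bottom.
        y = 1.2 - 2.4 * row / (height - 1)
        line = ""
        for col in range(width):
            # Map the column index to the real axis, left to right.
            x = -2.0 + 3.0 * col / (width - 1)
            line += "#" if in_mandelbrot(complex(x, y)) else "."
        rows.append(line)
    return "\n".join(rows)


print(render())
```

Running it prints a rough silhouette of the set. Raising `width`, `height`, and `max_iter` sharpens the picture, but the rule itself never changes: all the infinite detail comes from that one line inside the loop.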

Neural Networks

This is what the modern AI field looks like to me. It’s an endless effort to replicate some aspects of naturally evolved organisms (e.g. genetic algorithms) and neural systems (e.g. deep learning). It’s not getting us closer to the root: a hypothetical mechanism that leads not only to intellect (whatever that is), but perhaps even to life itself.

It’s easy to see why we as humanity are drilling the scientific rig into this. We observe how biological systems work (genes and neurons), and we get inspired by them. The task becomes fairly well defined: just pattern match based on a ton of data, and do it well! The alternative I’m hinting at is just too hard to pinpoint. If we aren’t doing neural networks, then what exactly? Nobody wants to work on a problem when they can’t even say what space it lives in (generally speaking; there are exceptions). And finally, there are clear wins from drilling at this level: we can create smarter systems that help people.

My point is that the neural network level is a very high-level emergent property of self-organized matter. We need to go lower, and to do this we’ll first need to study emergence itself. If we understand how emergent properties form, maybe we can reverse-engineer them well enough to dig out the lower levels. Or maybe this cypher of the universe is uncrackable, but at least we’d be able to build our own pyramids of emergent behaviors that expose interesting and useful properties to us.